Nurses in New York are raising serious concerns about AI tools being quietly rolled out in hospitals without their input. These healthcare workers say the technology threatens their job security and could compromise patient care. While hospital administrators see AI as a way to cut costs and improve efficiency, nurses on the front lines argue that patient care requires a human touch that algorithms simply cannot provide.

This tension between cost-saving goals and quality care has reached a breaking point. Hospital systems in New York have been integrating AI-powered tools behind the scenes, and many nurses feel blindsided. The question is no longer whether AI will transform healthcare, but whether that transformation will happen with or without the people who actually deliver care every day.

Hospital Systems Roll Out AI Without Nurse Input

Hospital administrators have been implementing AI tools without transparent communication or collaboration with nursing staff. Professional nursing organizations insist that AI should support nurses, not replace them, and that nurses must be involved in developing these systems. Without this collaboration, hospitals risk creating tools that are disconnected from the realities of patient care.

The lack of transparency has sparked fierce opposition from nursing organizations and local politicians. They argue that decisions affecting patient safety and thousands of healthcare jobs should not be made behind closed doors. When the primary goal is cost reduction, the human element of care often gets sacrificed first.

The Real Risks of Artificial Care

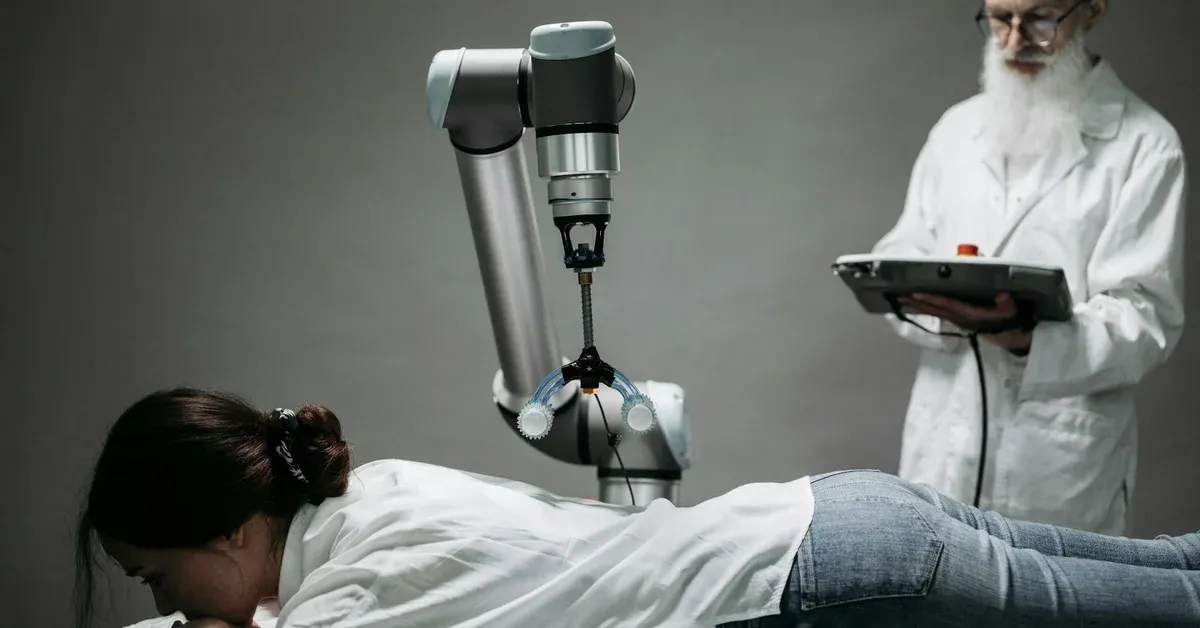

Nurse leaders in New York have coined the term "artificial care" to describe their concerns. They worry that relying on AI for critical patient monitoring creates new opportunities for errors and bias. Language models and other AI systems excel at processing data, but they struggle with the unpredictable and deeply personal aspects of patient care that nurses handle every day.

The concerns go beyond job security. Nurses report that automated systems make mistakes, amplify biases in the data they rely on, and create additional work for staff who must correct these errors. When AI systems fail in a hospital setting, the consequences are far more serious than a software glitch. The situation echoes other cases where AI innovation clashed with ethical responsibility, such as the one described in "AI's Morality Meltdown: Fake Disability Influencers Hijack Social Media."

Cost Cutting Versus Patient Care

The conflict comes down to a familiar tension: hospital administrators focused on budgets versus nurses committed to their patients. From an executive perspective, AI promises to ease resource strain and eliminate inefficiencies. Automated documentation, mobile platforms, and tech-enabled services are pitched as solutions to financial challenges.

For nurses, these resource-saving solutions feel like an attack on their ability to provide compassionate care. They see the reduction of human interaction as a dangerous trade-off where financial concerns override patient needs. Legislators with nursing backgrounds are demanding that hospitals invest in people, not unproven technology. The human need for empathy cannot be outsourced to an algorithm.

Building a Better Future for Healthcare

The way forward requires a complete shift from quiet implementation to genuine collaboration. Medical experts agree that AI has the potential to improve efficiency and enhance patient experiences, but only with strong oversight, quality data, and buy-in from organizations, practitioners, and patients.

Nurses must be involved as co-creators, not just end users, of AI solutions. Their understanding of practice realities and patient needs is essential for developing tools that truly help rather than hinder care. As the New York Post reported, nurses warn that without their involvement, AI risks becoming artificial care that magnifies biases and undermines safety.

Even in lower-stakes settings, AI reliability is questionable. Chatbots are known to fabricate academic citations, and in healthcare the stakes are much higher. Some tech founders have recognized these risks; one even shut down an AI therapy app over safety fears.

The future of healthcare does not have to be humans versus machines. It can be a partnership, but only if the humans providing care are respected and prioritized at every step.