A U.S. citizen was wrongly flagged by ICE’s facial recognition technology twice, raising serious questions about how government agencies rely on flawed AI for identity verification. The woman, trying to check her immigration status, was confronted by ICE agents who insisted their mobile app had made a positive match, even after she showed them her birth certificate as proof of citizenship. This case highlights a troubling reality: systems marketed as definitive tools for recognition are failing in ways that could upend innocent lives.

The app, Mobile Fortify, compared her face against a database of 200 million images and came back with the wrong name. Even when presented with official documents, agents doubled down, calling the AI’s determination of immigration status “definitive.” It’s a stark example of technology overriding common sense and due process.

When Facial Recognition Gets It Wrong

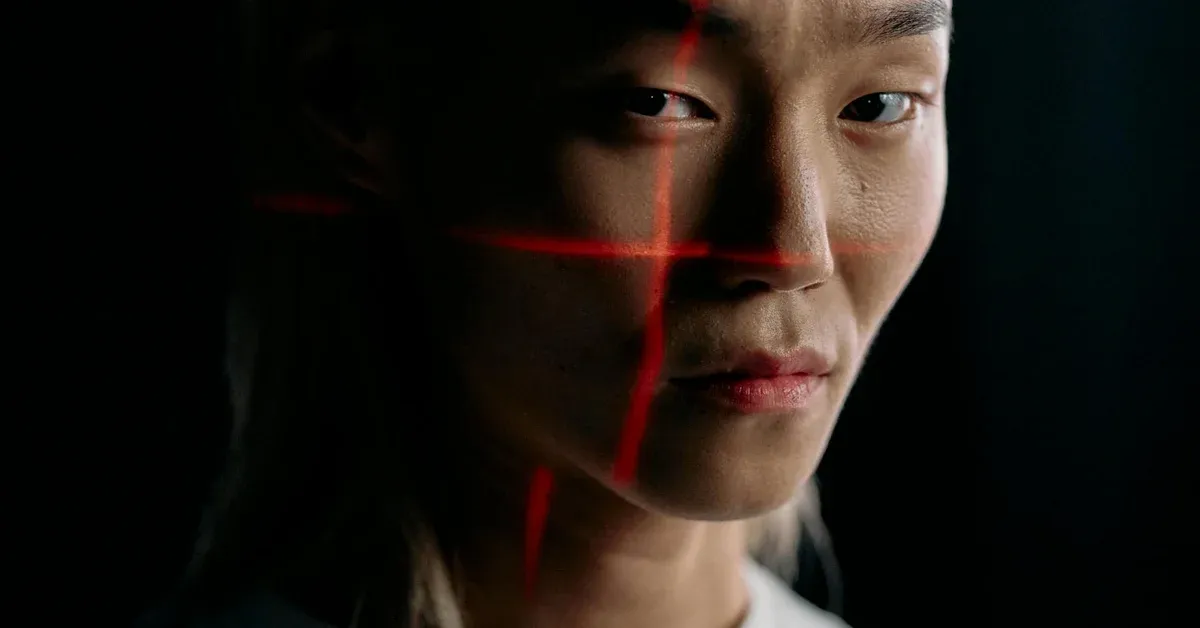

This incident exposes the darker side of biometric surveillance. Mobile Fortify, used by Immigration and Customs Enforcement and Customs and Border Protection, lets agents scan faces in public spaces using just a phone. The promise is instant identification and access to personal data. The reality is a system that struggles to tell people apart accurately, especially across different demographics.

Facial recognition technology has well-documented accuracy problems. It performs worse on women, children, and people of color, making misidentification more likely for these groups. When a machine’s judgment trumps a citizen’s own birth certificate, we’ve crossed into dangerous territory. The idea that such flawed tech could provide a definitive determination about someone’s identity or immigration status isn’t just wrong, it’s reckless.

Lawmakers Push Back on Surveillance Creep

Lawmakers are finally taking notice. The top Democrat on the House Homeland Security Committee recently introduced legislation to curb the Department of Homeland Security’s use of mobile biometric surveillance. The proposed Realigning Mobile Phone Biometrics for American Privacy Protection Act would prohibit DHS from using apps like Mobile Fortify outside designated ports of entry.

The bill would also require destruction of biometric data collected from U.S. citizens and mandate removal of these applications from departmental systems that aren’t at border crossings. It’s a legislative attempt to pull back the expansion of surveillance tools that lack proper oversight. This comes as public concern mounts over agencies deploying facial recognition and fingerprinting tech in public spaces without accountability.

The timing matters. When AI systems start making errors with serious consequences, as when AI chatbots make up academic citations, we need to question their deployment in high-stakes situations.

The Technology Isn’t Ready for This

The core problem is the technology itself. Facial recognition isn’t the magic solution agencies claim it to be. These systems are susceptible to biases in their training data, leading to persistent failures in recognition accuracy. Experts consistently point out these flaws, yet agencies with enormous power continue deploying them as if they’re infallible.

Handing flawed technology to law enforcement agencies, then expecting perfect outcomes, is a recipe for disaster. The potential for a quick scan to destroy someone’s life is real. As reported by 404Media, this woman’s experience with ICE’s app shows exactly what can go wrong. It echoes broader concerns about digital identity manipulation, such as yearbook photos being turned into deepfake porn by AI.

Privacy Rights Versus Surveillance Efficiency

Beyond individual cases, the implications for privacy and civil liberties are massive. The push for AI-driven surveillance risks eroding fundamental rights without proper public consent or oversight. When government agencies claim they need to know who people are at all times, the power balance shifts dramatically. Public spaces become digital dragnet zones.

Even as lawmakers work to curtail ICE’s biometric use, as seen in recent tech bills of the week, the fundamental issue remains. Agencies are still drawn to AI as a definitive solution for identification, despite clear evidence that these tools fail regularly.

These systems need rigorous accountability, transparency, and healthy skepticism. The conversation should shift from how smart these systems are to whether their implementation can ever be ethical and just. If ICE agents keep using banned chokeholds despite policy, trusting them with fallible facial recognition technology seems optimistic at best. The misidentification of a U.S. citizen twice by the same app should be a wake-up call, not just another data point.